Mixed Reality

A physically interactive augmented experience

Role:

Product designer, Unity developer, XR interaction design, level design

Team:

Solo project

Duration:

2 months from start to finished product

01

Project Origination:

Background, requirements, design medium, and initial product ideation tests

Background

The brief for this project was simple: choose an industry and create a product with some perceived value to that industry in either a virtual or augmented reality format. I immediately chose AR as my preferred design medium, which, as you’ll see, later morphed into a mixed-reality experience. For numerous reasons, I feel that AR is the more exciting, practical, and viable extended reality platform for the future, especially once unobtrusive wearable technology becomes more widespread. Although I was free to use virtually any development platform, I chose to build this experience in Unity, with Vuforia image targets for tracking and Visual Studio for scripting.

Experimental Ideation

Initially, without knowing the final direction for the project, I created several image targets and began experimenting. Alongside multiple rigid flat targets, some based on everyday items, I also created a few 3-D targets with potentially interesting use cases, including a cube and a cylinder. Although exciting in principle, the acquisition and tracking of the 3-D targets proved unreliable during testing. Focusing instead on the more reliable 2-D targets produced several ideas for a game-like experience. One idea, a portable chess game that used playing cards to render virtual chess pieces, revealed that more than 8-10 simultaneous image targets quickly overwhelmed the tracking system. Another design experiment featured a rocket that could be flown around the room with a controller to collect objects. While entertaining, it was difficult to justify as an AR product beyond basic novelty.

02

Innovation:

A novel interaction, opportunistic control scheme, design logistics, and obstacles

Design Concept

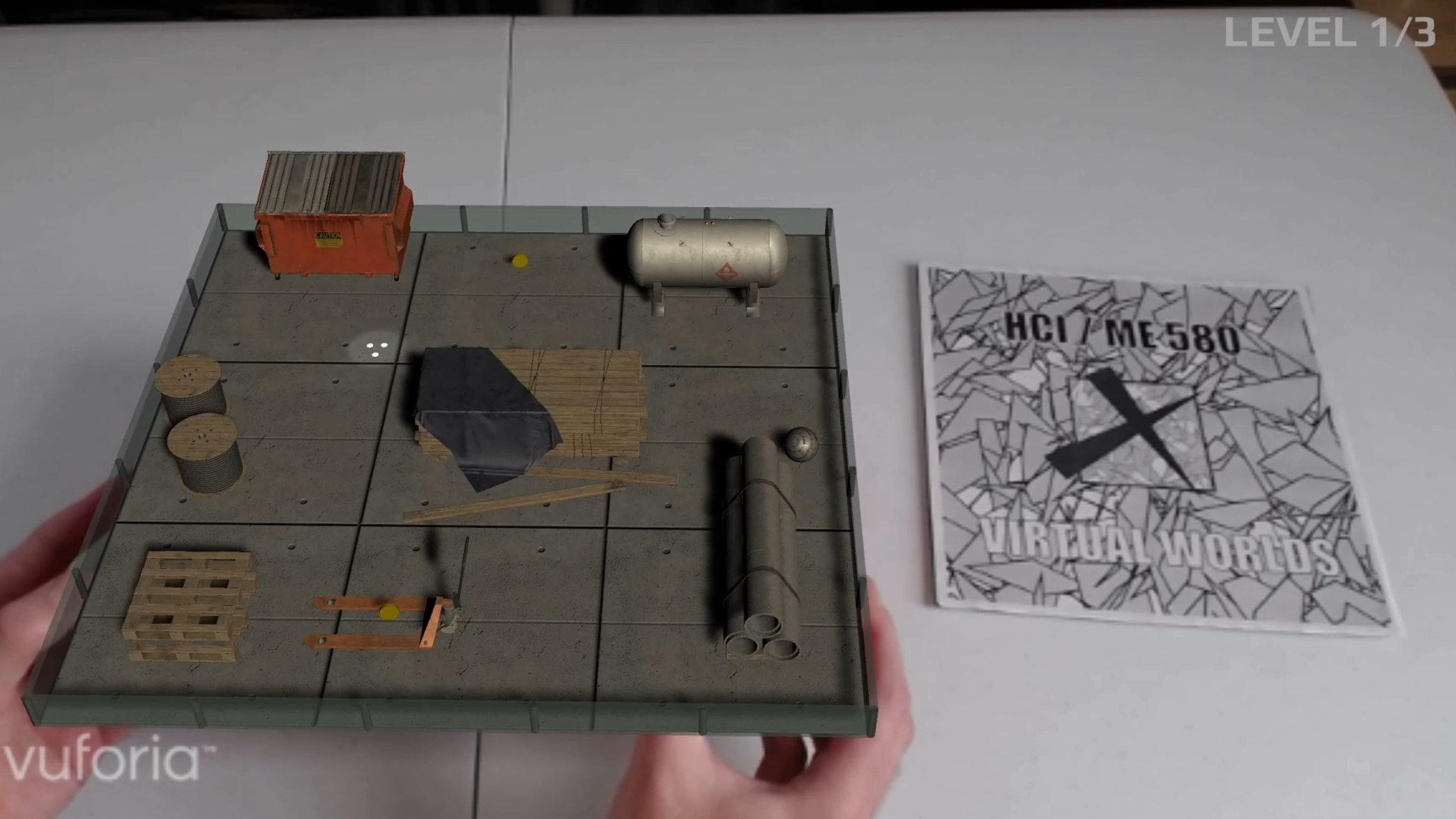

Back at the drawing board, I finally stumbled on the concept I would eventually move forward with while thinking about games from my childhood. I recalled the old wooden labyrinth maze games in which tilting the board surface moved a small metal ball through obstacles. I adapted this idea to a mixed-reality experience whereby a rigid image target (in this case, a cereal box) would be used as a physical interface to control a virtual ball by tilting it to guide its movement. Ideally, there would be multiple levels and a series of collectible items for the player to find in order to advance. I envisioned the game as a potential marketing tool and modern-day replacement for the cereal box prizes that were once commonplace.

Improved Immersion

The physical interaction in most AR games seems to be limited to tapping or flicking on-screen buttons and objects as the primary form of user input, so the idea of tilting a physical target to control player movement was instantly appealing to me. Henderson and Feiner (2008) described these types of interaction techniques as “Opportunistic Controls” whereby “a tangible user interface leverages naturally occurring, tactilely interesting, and otherwise unused affordances…already present in the domain environment.” Furthermore, their study found that opportunistic controls supported faster completion times in an augmented-reality task involving an aircraft engine. This suggests that direct physical interaction, or passive haptic feedback, can improve not only immersion but also, in some instances, performance.

Henderson, Steven & Feiner, Steven. (2008). Opportunistic Controls: Leveraging natural affordances as tangible user interfaces for augmented reality. Proceedings of the ACM Symposium on Virtual Reality Software and Technology, VRST. 211-218. 10.1145/1450579.1450625.

Design Challenges

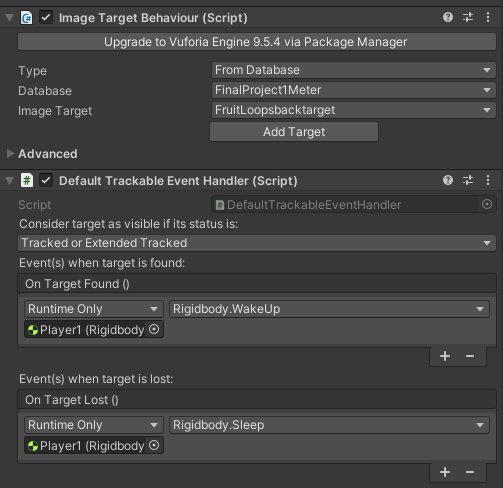

I first created a bare play surface with boundary walls for testing purposes and wrote C# scripts for item pickups, audio cues, and hidden scene-transition objects that would only appear after all ten coin pickups were collected. The first problem arose when I discovered that the player object simply fell through the playing surface on startup. This took some time to diagnose, but ultimately I determined that the player's spherical rigidbody and Unity physics became active immediately, while image target detection was slightly delayed. By the time the play surface was rendered, the player model had already fallen beneath it due to gravity. The solution was in Vuforia’s “Default Trackable Event Handler” script, which contained an option to trigger sleep/wake events based on whether the image target was found or lost. This allowed the image target to be acquired before the rigidbody, and with it the effects of gravity, became active.
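The timing problem described above can be reproduced outside Unity. The sketch below is not the project's C# code; it is a minimal, engine-independent Python model of the idea, in which a ball is either simulated from the first frame or kept asleep until the target is reported as tracked. All names (`Ball`, `physics_step`, `simulate`) and the numbers (a 30-step detection delay, a 60 Hz step) are assumptions chosen for illustration.

```python
from dataclasses import dataclass

GRAVITY = -9.81    # m/s^2, straight down
SURFACE_Y = 0.0    # height of the play surface once it exists
DT = 1.0 / 60.0    # fixed physics step, in seconds


@dataclass
class Ball:
    y: float = 0.05       # spawns just above where the surface will be
    vy: float = 0.0
    asleep: bool = False  # a sleeping body ignores gravity


def physics_step(ball: Ball, surface_present: bool) -> None:
    """Advance the ball by one step unless it is asleep."""
    if ball.asleep:
        return
    previous_y = ball.y
    ball.vy += GRAVITY * DT
    ball.y += ball.vy * DT
    # The surface only catches a ball that crosses it from above.
    if surface_present and previous_y >= SURFACE_Y > ball.y:
        ball.y, ball.vy = SURFACE_Y, 0.0


def simulate(gate_on_tracking: bool, detection_delay_steps: int = 30,
             total_steps: int = 120) -> float:
    """Return the ball's final height after total_steps physics steps."""
    ball = Ball(asleep=gate_on_tracking)
    for i in range(total_steps):
        tracked = i >= detection_delay_steps  # target found after a delay
        if gate_on_tracking:
            ball.asleep = not tracked         # wake on found, sleep on lost
        physics_step(ball, surface_present=tracked)
    return ball.y


if __name__ == "__main__":
    print("ungated:", simulate(gate_on_tracking=False))
    print("gated:  ", simulate(gate_on_tracking=True))
```

In this model the ungated ball is already below the surface when tracking begins and keeps falling, while the gated ball stays put until the surface exists and then comes to rest on it.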

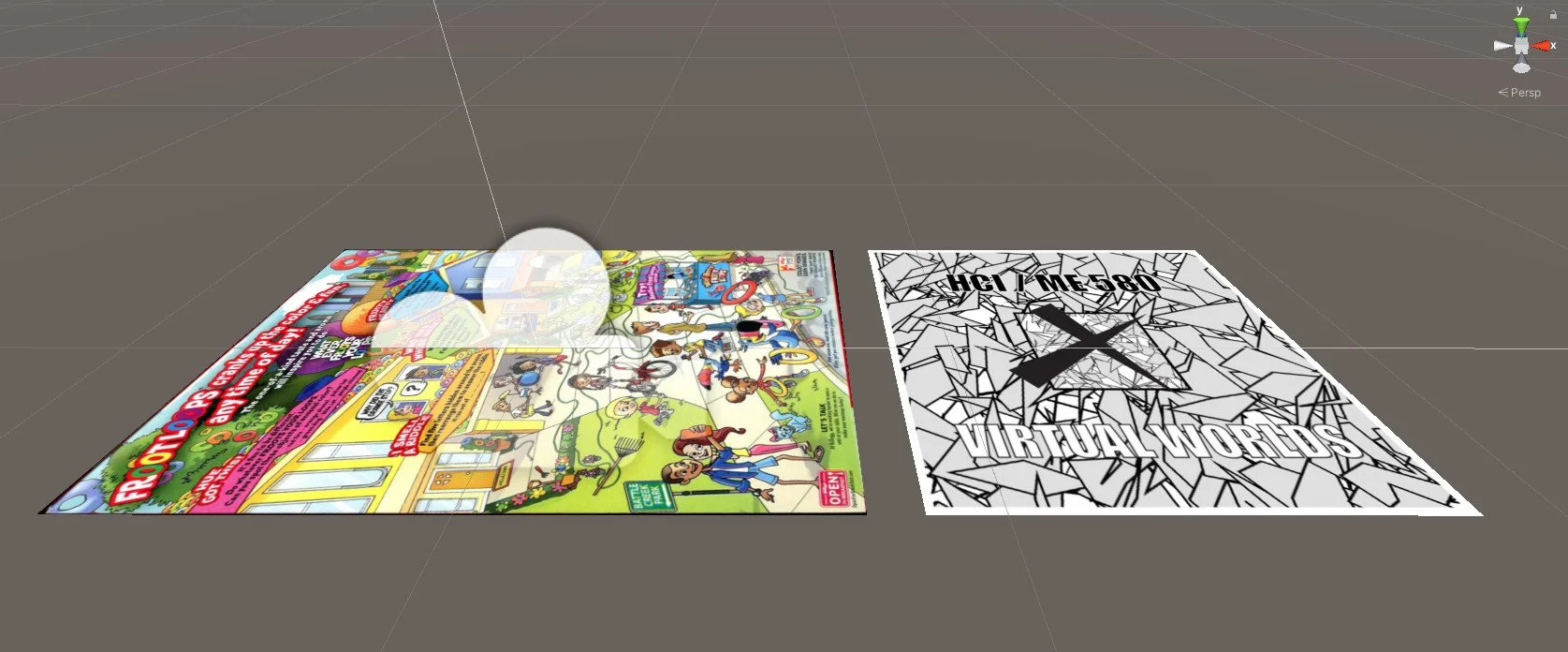

Gravity

The next major issue came when attempting to get the player model to roll around the playing surface in a realistic manner. The “Vuforia Behavior” script has an option for “World Center Mode.” By default, this is set to “Device,” which in this case is the webcam. With this setting, the player model exhibited some strange behavior. The rigidbody would sometimes defy gravity and roll in the opposite direction of the tilt and, at other times, seemed to wildly change position without warning. One possible solution would have been to calculate gravity based on a fixed camera angle. For instance, if the camera maintained a 45-degree downward angle, then gravity could be calculated by accounting for this angle, provided the exact camera position was maintained at all times. This was limiting, especially if the camera in question was a handheld smartphone. Instead, I implemented a secondary target to act as the “World Center,” which would lie on a flat surface next to the primary target. This allowed Unity to orient itself in relation to the secondary target: as long as the secondary target remained in view and stationary, Unity recognized any tilting of the primary target as a deviation from level, and gravity worked realistically and as expected.
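The geometry behind this can be shown with a small calculation. The following is a simplified Python sketch, not the project's Unity code, and it assumes that physics gravity always points along the reference frame's own "down" axis and that all tilts happen about a single axis. Under those assumptions, a board tilted by an angle θ from the frame's "up" accelerates the ball along its surface by g·sin θ. The function names and the `frame_tilt_deg` parameter are hypothetical.

```python
import math

G = 9.81                          # m/s^2
UP = (0.0, 1.0, 0.0)              # the reference frame's "up" axis
FRAME_GRAVITY = (0.0, -G, 0.0)    # gravity as the physics engine applies it


def tilt_about_x(vector, degrees):
    """Rotate a 3-D vector about the x axis by the given angle."""
    a = math.radians(degrees)
    x, y, z = vector
    return (x,
            y * math.cos(a) - z * math.sin(a),
            y * math.sin(a) + z * math.cos(a))


def in_plane_acceleration(gravity, board_normal):
    """Remove the part of gravity that pushes into the board."""
    along_normal = sum(g * n for g, n in zip(gravity, board_normal))
    return tuple(g - along_normal * n for g, n in zip(gravity, board_normal))


def magnitude(vector):
    return math.sqrt(sum(c * c for c in vector))


def roll_acceleration(board_tilt_deg, frame_tilt_deg=0.0):
    """Acceleration of the ball along the board surface.

    frame_tilt_deg is how far the reference frame's "up" deviates from
    true vertical: 0 for a flat secondary target, or the camera pitch
    when the camera itself is the world center.
    """
    normal = tilt_about_x(UP, board_tilt_deg + frame_tilt_deg)
    return magnitude(in_plane_acceleration(FRAME_GRAVITY, normal))


if __name__ == "__main__":
    # Flat secondary target as world center: a level board is at rest.
    print(roll_acceleration(board_tilt_deg=0.0))
    # Same frame, board tilted 10 degrees: g * sin(10 degrees).
    print(roll_acceleration(board_tilt_deg=10.0))
    # Camera pitched 45 degrees as world center: a level board still "rolls".
    print(roll_acceleration(board_tilt_deg=0.0, frame_tilt_deg=45.0))
```

With a level reference frame, only the board's real tilt produces motion. When the frame itself is pitched, that pitch is added to every reading, which is consistent with a ball that rolls on a level board or moves against the apparent tilt.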

03

Level Design:

Leveraging Unity assets for thematically distinct level designs

Level Design

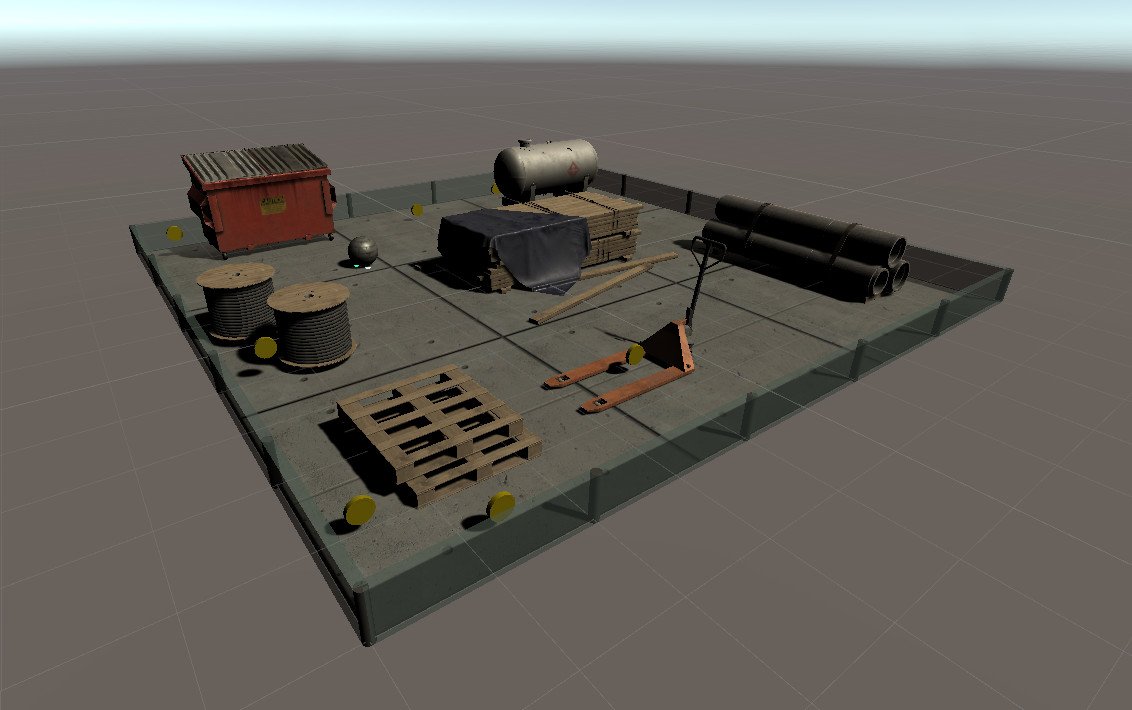

With the game’s general functionality now operating correctly, it was time to add background textures, models, and lighting effects to three distinct levels. These elements had two goals: to add challenge by complicating navigation and hiding collectibles, and to give each level an aesthetically pleasing visual theme.

Level 1

An urban/industrial theme built with Unity materials such as concrete and metal textures, as well as industrial models.

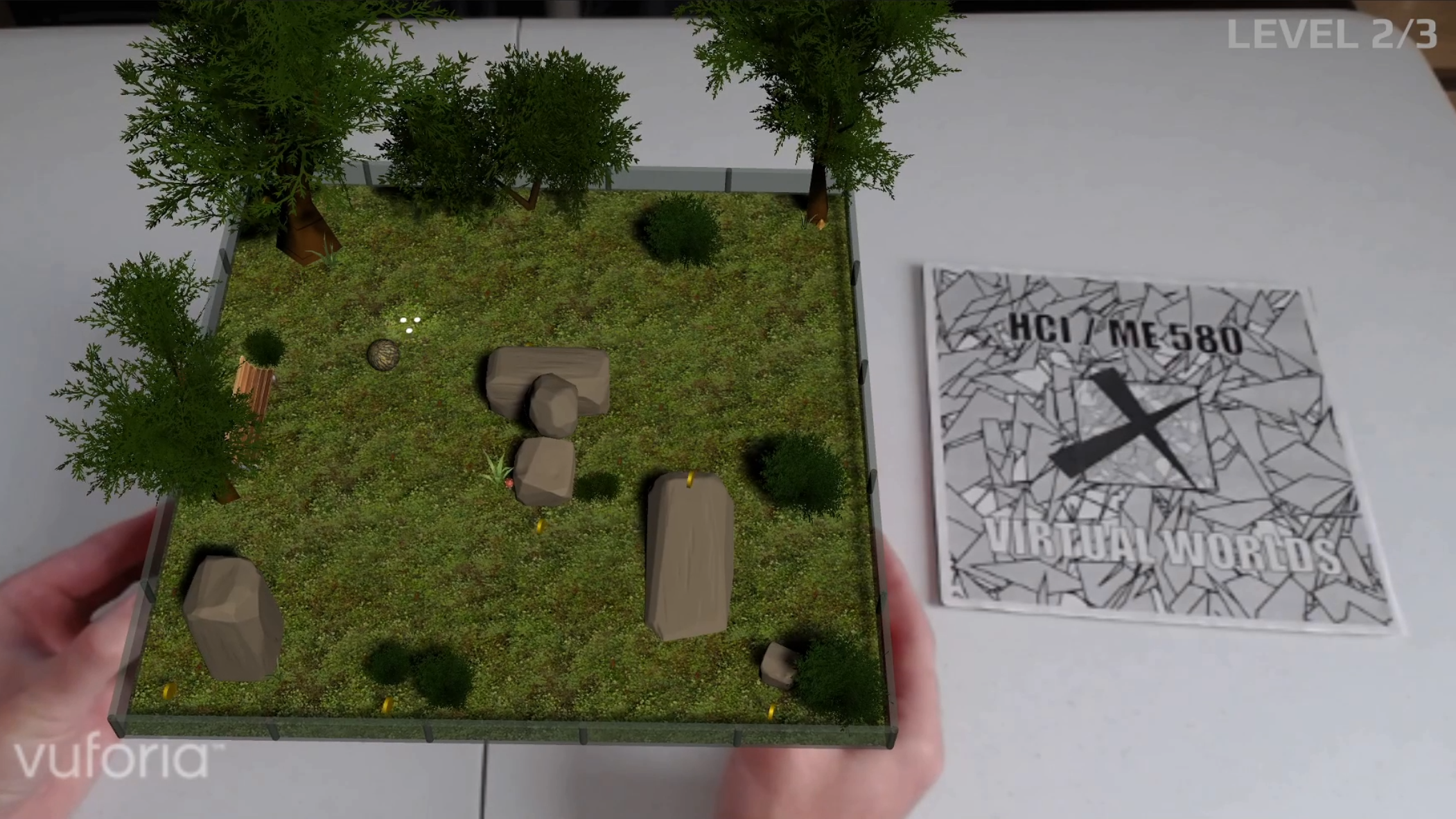

Level 2

A nature/park/greenspace themed level with grass textures, trees, and boulders with custom colliders.

Level 3

A darkened sci-fi themed level with glowing assets and point lighting for the player model.

Full Demo Video (4 min)

04

Conclusion:

Successes, learnings, and areas for improvement

Tactile Interface

The project's central focus was on the extra layer of immersion provided by the physical manipulation of a cereal box to control the player model. This form of passive haptic feedback is arguably the most compelling aspect of the project and adds significantly to the level of engagement and sense of presence. While investigating strategies for increasing immersion in virtual environments, I found several studies that examined forms of feedback beyond the standard visual and auditory stimuli. Garcia-Valle et al. (2017) set out to determine the level of immersion, presence, and realism afforded by a haptic vest equipped with both tactile and thermal actuators. The study found that participants deemed the additional physical feedback to be beneficial during a virtual reality simulation of a train crash. Valkov and Flagge (2017) conducted a laboratory study that slowly blended the real world with a virtual environment in what they termed “smooth immersion.” They found that participants who entered through a smooth transition felt more confident in the virtual world, moved more quickly within it, and kept smaller safety distances to real-world objects. Finally, Löchtefeld et al. (2017) incorporated the idea of “Tangible User Interfaces” in a map-based experiment. TUIs were described as interfaces that utilize the human ability to grasp and manipulate physical objects by incorporating them as a means of manipulating digital information. In this case, they found that spatial recall of building locations was 24.5% more accurate when participants were allowed to manipulate 3D-printed models of the buildings as opposed to simply using a touch screen. These experimental examples, which highlight the benefits of direct physical interaction, haptic feedback, and the mixing of real and virtual elements, all support the idea of improved immersion, which became one of the central features of this project.

Garcia-Valle, Gonzalo & Ferre, Manuel & Breñosa, Jose & Vargas, David. (2017). Evaluation of Presence in Virtual Environments: Haptic Vest and User’s Haptic Skills. IEEE Access. PP. 1-1. 10.1109/ACCESS.2017.2782254.

Löchtefeld, Markus & Wiehr, Frederik & Gehring, Sven. (2017). Analysing the effect of tangible user interfaces on spatial memory. 78-81. 10.1145/3131277.3132172.

Valkov, Dimitar & Flagge, Steffen. (2017). Smooth immersion: the benefits of making the transition to virtual environments a continuous process. 12-19. 10.1145/3131277.3132183.

Areas for Improvement

Although I consider this project a success, the time constraints and my initial unfamiliarity with the development tools leave a few areas open to improvement and expansion. This project used a webcam mounted on a tripod for demonstration purposes; a smartphone implementation would be awkward, since the player would have to hold the phone while manipulating the target. In the future, however, if lightweight wearable tech becomes ubiquitous, these augmented and mixed reality experiences will become commonplace and, in my opinion, will be far more valuable in real-world applications than fully virtual environments. Regarding the handling of gravity in the final product, the secondary world-centering target was effective but came with some drawbacks: it adds a requirement for operation and must remain in view. Future iterations might use automatic ground plane detection, taking cues from the surrounding environment to establish a level baseline for gravity without the need for additional image targets. Finally, if more time had been available, I would have liked to add some extra levels, a more in-depth score counter, unique point values for pickups, and possibly even bonus points for speed of level completion. These features were difficult to implement for this project because I was using the Unity scene manager for level progression, and carrying data across multiple scenes required more complex scripting than time allowed.
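As an illustration of the scoring idea, the sketch below keeps the running total in an object that lives outside any single level, so the totals survive a level change. It is an engine-independent Python model rather than Unity code, and every name and number in it (`Session`, `PICKUP_POINTS`, the 60-second par time, the bonus rate) is hypothetical. In Unity, one common way to get a similar effect is to hold such state on an object marked with `DontDestroyOnLoad`, though this project did not implement that.

```python
from dataclasses import dataclass, field

# Hypothetical values, chosen only to make the arithmetic easy to follow.
PICKUP_POINTS = {"coin": 10, "gem": 50}
PAR_TIME_SECONDS = 60.0
BONUS_PER_SECOND = 5


@dataclass
class Session:
    """Holds score data for the whole playthrough, not for one level."""
    total_score: int = 0
    level_scores: list = field(default_factory=list)

    def finish_level(self, pickups, seconds_taken):
        """Score one level and fold it into the running total."""
        base = sum(PICKUP_POINTS[name] for name in pickups)
        seconds_under_par = int(max(0.0, PAR_TIME_SECONDS - seconds_taken))
        level_score = base + seconds_under_par * BONUS_PER_SECOND
        self.level_scores.append(level_score)
        self.total_score += level_score
        return level_score


if __name__ == "__main__":
    session = Session()
    session.finish_level(["coin"] * 10, seconds_taken=45.0)
    session.finish_level(["coin"] * 9 + ["gem"], seconds_taken=90.0)
    print(session.level_scores, session.total_score)
```

Each level only reports what was collected and how long it took; the session object owns the totals, so individual levels never need to pass data to one another directly.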

Final Thoughts

I wanted to include this mixed reality project as a somewhat atypical case study with the hope that it would show my range beyond more traditional two-dimensional experiences and my ability to leverage and quickly adapt to unfamiliar development tools. Extended reality is a relatively new and exciting design medium that will hopefully reach critical mass once the underlying tech evolves to become less obtrusive. It was also refreshing to ideate creatively within a fledgling design space with fewer guardrails and established norms.